Caching

Almost all content displayed in the browser consists of resources fetched over the network. Fetching is a multi-step process, so it is often slow and expensive, for reasons including, but not limited to, the following:

- A round trip from sending a request to receiving its response can take a long time if the response is large or the network is slow.

- Multiple round trips might be required.

- Nothing can be displayed until all critical resources are ready.

- Slow content loading can lead to a poor user experience.

However, some resources are fairly static and unlikely to change. This lets us optimize fetching with a caching mechanism that reuses earlier responses. Caching not only speeds up responses on the client side, but also lowers server load, since the server receives fewer requests.

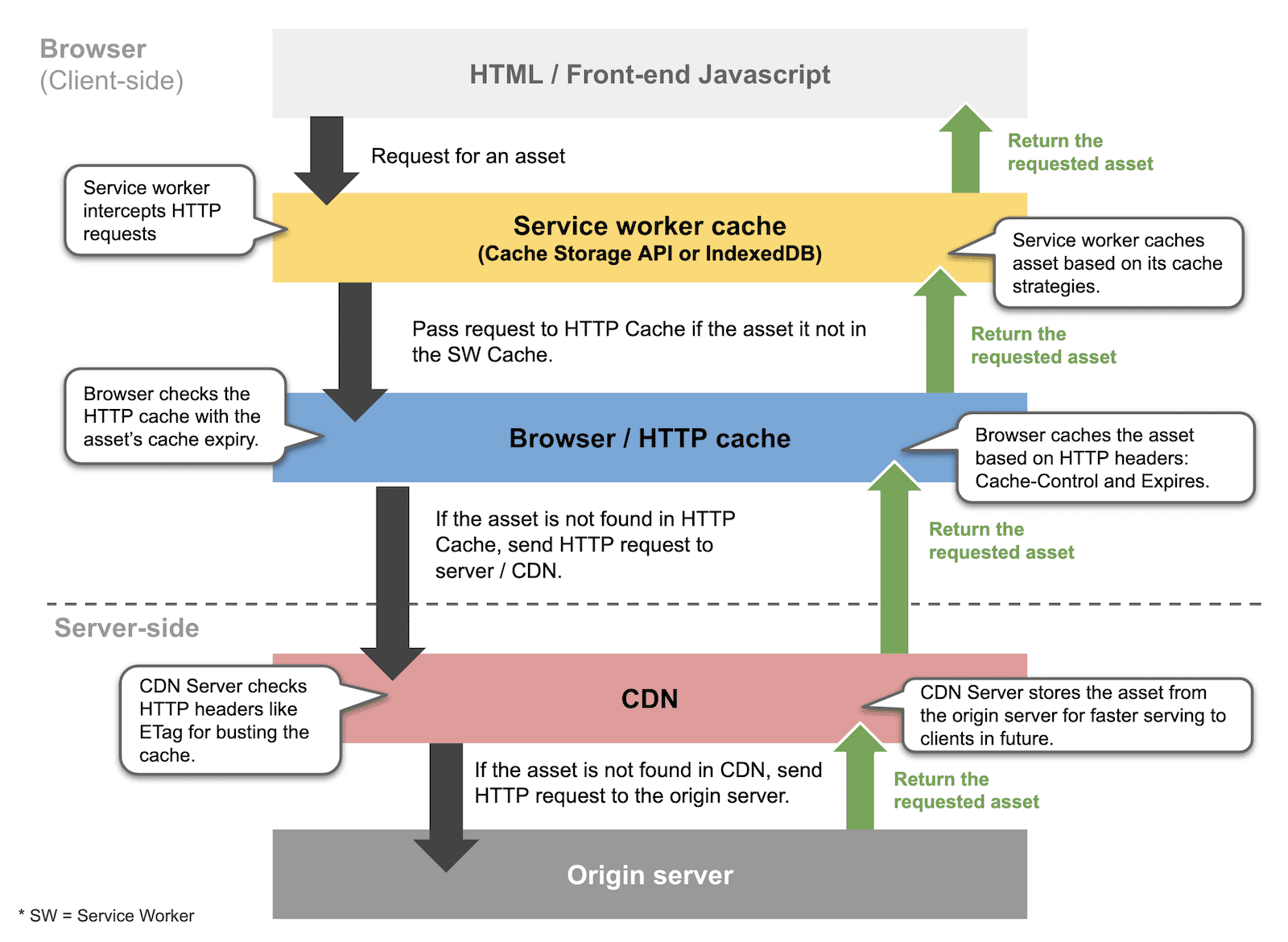

Since fetching a resource is a multi-step process, different types of cache can be introduced at different steps.

From a high-level perspective, a request sent from the browser goes through a sequence of cache checks, as shown below:

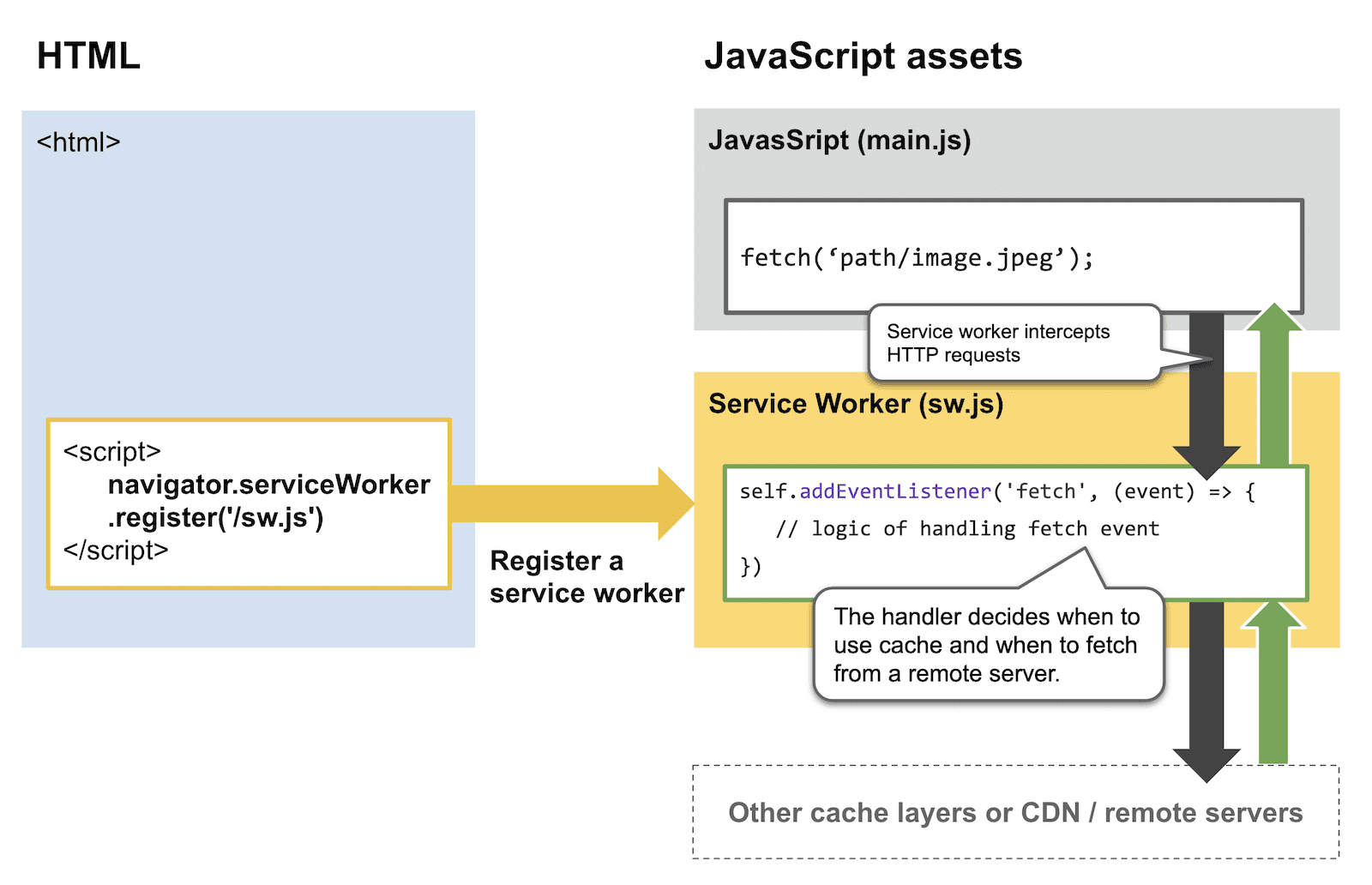
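The lookup order can be sketched in a few lines. This is a simplified model, assuming the order memory cache → service worker cache → HTTP cache → network described in the sections below. Each layer is a plain `Map` keyed by URL, and the layer names and the network callback are hypothetical stand-ins for browser internals; a real service worker runs arbitrary code rather than doing a simple lookup.

```javascript
// Simplified model of the browser's cache lookup chain. Each layer is a Map
// keyed by URL; the layer names and fetchFromNetwork are hypothetical.
function createLayeredLookup(layers, fetchFromNetwork) {
  return async function lookup(url) {
    // Check layers in order; the first one holding the URL answers the request.
    for (const { name, store } of layers) {
      if (store.has(url)) {
        return { source: name, body: store.get(url) };
      }
    }
    // Every cache layer missed, so fall through to the network.
    const body = await fetchFromNetwork(url);
    return { source: 'network', body };
  };
}

// Example layers, ordered from fastest to slowest.
const memoryCache = new Map([['/logo.png', '<png bytes>']]);
const serviceWorkerCache = new Map([['/app.js', 'console.log("app")']]);
const httpCache = new Map([['/style.css', 'body { margin: 0 }']]);

const lookup = createLayeredLookup(
  [
    { name: 'memory', store: memoryCache },
    { name: 'service worker', store: serviceWorkerCache },
    { name: 'http', store: httpCache },
  ],
  async (url) => `fetched ${url}`, // hypothetical stand-in for a real network request
);
```

For instance, `lookup('/style.css')` resolves with `source: 'http'` because neither earlier layer holds it, while `lookup('/data.json')` misses every layer and reaches the network. A hit in an earlier layer skips every later one, including the network round trip.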

Service Worker Cache

A service worker intercepts network-type HTTP requests and uses a caching strategy to determine which resources should be returned to the browser. The service worker cache has the following advantages:

- More caching capabilities, such as fine-grained control over what is cached and how caching is done.

- More memory and storage space for the same origin, since the browser allocates HTTP cache resources on a per-origin basis.

- Greater flexibility for flaky networks and offline experiences, using caching strategies such as stale-while-revalidate (SWR).

Common caching strategies for the service worker cache include:

- Network only

- Network falling back to cache

- Stale-while-revalidate

- Cache first, falling back to network

- Cache only
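To make the differences concrete, here is a minimal sketch of three of these strategies. It is not Workbox's implementation: `cache` is a plain `Map` standing in for the Cache API, and `fetchFromNetwork` is a hypothetical async function that rejects when the network is unavailable.

```javascript
// Minimal sketches of three service worker caching strategies.
// `cache` is a Map standing in for the Cache API; `fetchFromNetwork` is a
// hypothetical async function that rejects when the network is unavailable.

// Cache first, falling back to network (storing the network result).
async function cacheFirst(url, cache, fetchFromNetwork) {
  if (cache.has(url)) return cache.get(url);
  const body = await fetchFromNetwork(url);
  cache.set(url, body);
  return body;
}

// Network first, falling back to cache when the network fails.
async function networkFirst(url, cache, fetchFromNetwork) {
  try {
    const body = await fetchFromNetwork(url);
    cache.set(url, body);
    return body;
  } catch (err) {
    if (cache.has(url)) return cache.get(url);
    throw err; // nothing cached either, so surface the network error
  }
}

// Stale-while-revalidate: answer from the cache immediately when possible,
// and refresh the cached copy in the background.
async function staleWhileRevalidate(url, cache, fetchFromNetwork) {
  const refresh = fetchFromNetwork(url).then((body) => {
    cache.set(url, body);
    return body;
  });
  if (cache.has(url)) {
    refresh.catch(() => {}); // a failed background refresh keeps the stale copy
    return cache.get(url);
  }
  return refresh; // cache miss: wait for the network
}
```

Workbox ships ready-made versions of these strategies (`CacheFirst`, `NetworkFirst`, `StaleWhileRevalidate`, `NetworkOnly` and `CacheOnly` in `workbox-strategies`), so in practice they rarely need to be hand-written.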

To work with service workers, a popular library such as Workbox is recommended. For example, a route for stylesheets can be registered as follows:

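import {registerRoute} from 'workbox-routing';
import {StaleWhileRevalidate} from 'workbox-strategies';

// Serve stylesheets under styles/ from the cache, refreshing them in the background
registerRoute(new RegExp('styles/.*\\.css'), new StaleWhileRevalidate({cacheName: 'css-cache'}));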

HTTP Cache (a.k.a. Browser Cache)

In the browser, all HTTP requests are first routed to the HTTP cache, which checks whether it holds a valid cached response for the request. If there is a match, the response is read from the cache; otherwise, the request is sent to the server.

The HTTP cache's behavior is controlled by a combination of request headers and response headers.

For request headers, it is usually best to stick with the defaults, since the browser almost always sets them before making a request. If more control over caching behavior is needed, an alternative is to pass the fetch API a Request object with its cache option configured.
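As a sketch, the `cache` option on `Request` selects one of the standard fetch cache modes (`default`, `no-store`, `reload`, `no-cache`, `force-cache`, `only-if-cached`). The helper name `makeRequest` and the URLs below are hypothetical; the modes only take effect where the runtime has an HTTP cache, as browsers do.

```javascript
// Build a Request whose `cache` mode overrides the default HTTP cache behavior.
// `makeRequest` and the URLs below are hypothetical examples.
function makeRequest(url, cacheMode) {
  return new Request(url, { cache: cacheMode });
}

// 'no-cache': send a conditional request if a cached response exists,
// so the cached copy is only reused after revalidation.
const revalidated = makeRequest('https://example.com/api/profile', 'no-cache');

// 'no-store': behave as if there were no HTTP cache at all.
const uncached = makeRequest('https://example.com/api/profile', 'no-store');

// 'force-cache': use any cached response, even a stale one; hit the network only on a miss.
const preferCache = makeRequest('https://example.com/static/logo.png', 'force-cache');

// In a browser, pass the Request to fetch as usual, e.g.:
// fetch(revalidated).then((response) => response.json());
```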

Response headers, in contrast, are set on the server side. Great care must be taken here, because servers differ in how they set up these headers: some add default values, while others leave them out entirely. A misconfigured cache can cause redundant network calls that hurt server performance, and can potentially even leak data.
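On the server, the headers are usually chosen per type of response. The policy below is a hypothetical example, not a recommended default: the fingerprinted-filename pattern, the `personalized` flag and the lifetimes are all assumptions chosen for illustration.

```javascript
// Hypothetical server-side policy that picks a Cache-Control value per response.
// The path patterns and lifetimes are illustrative assumptions.
function cacheControlFor(path, { personalized = false } = {}) {
  if (personalized) {
    // User-specific data: keep it out of every cache to avoid leaking it.
    return 'no-store';
  }
  if (/\.[0-9a-f]{8,}\.(js|css)$/.test(path)) {
    // Fingerprinted assets get a new URL whenever their content changes,
    // so they can safely be cached for a year.
    return 'public, max-age=31536000, immutable';
  }
  if (path === '/' || path.endsWith('.html')) {
    // HTML may be stored, but must be revalidated before every reuse.
    return 'no-cache';
  }
  // Everything else: a short, conservative lifetime of five minutes.
  return 'max-age=300';
}
```

With Node's built-in `http` module, the returned value could be applied via `res.setHeader('Cache-Control', cacheControlFor(path))`, where `path` is the request path without its query string.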

Overall, HTTP caching is simpler than service worker caching, because HTTP caching only deals with time-based (TTL) resource expiration logic.
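The TTL logic boils down to one comparison: RFC 9111 (section 4.2) treats a stored response as fresh while its freshness lifetime is greater than its current age. The sketch below simplifies heavily. It takes already-parsed values in seconds, ignores `s-maxage` and heuristic freshness, and assumes `currentAge` has been computed elsewhere; the function names are hypothetical.

```javascript
// Simplified freshness check after RFC 9111 section 4.2:
// a stored response is fresh while freshnessLifetime > currentAge.
// All values are in seconds and assumed to be already parsed.
function freshnessLifetime({ maxAge, expires, date }) {
  // When max-age is present, Expires is ignored.
  if (maxAge !== undefined) return maxAge;
  if (expires !== undefined && date !== undefined) return expires - date;
  return 0; // no explicit lifetime (heuristic freshness is ignored in this sketch)
}

function isFresh(response, currentAge) {
  return freshnessLifetime(response) > currentAge;
}
```

For example, a response with `max-age=60` is fresh at an age of 30 seconds and stale from 60 seconds on.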

Aspects of HTTP related to caching are specified in RFC 9111.

Types of HTTP cache

RFC 9111 defines two main types of caches: private caches and shared caches.

- Private cache: A cache tied to a specific user, typically the browser cache. Because its contents are not shared with other users, it is often used to store personalized responses.

- Shared cache: A cache located between the client and the server that can store responses to be shared among users. Shared caches can be further sub-classified into proxy caches and managed caches:

  - Managed cache: A cache explicitly deployed by service developers to offload the origin server and to deliver content efficiently. Examples include reverse proxies, CDNs, and service workers in combination with the Cache API.

  - Proxy cache: A cache implemented by proxies to reduce traffic out of the network. It is usually not managed by the service developer, so it must be controlled by appropriate HTTP headers.
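The practical difference between private and shared caches shows up in which responses each may store. The function below is a simplified reading of RFC 9111 sections 3 and 3.5, assuming the response's `Cache-Control` directives have already been parsed into a `Set` of lowercase names. The function name and its inputs are hypothetical, and many other storage conditions in the RFC are ignored.

```javascript
// Simplified reading of RFC 9111 sections 3 and 3.5: may a cache of the given
// type store a response? `directives` is a Set of lowercase Cache-Control
// directive names from the response. Status codes, methods, freshness
// information and other conditions from the RFC are ignored here.
function mayStore(cacheType, directives, requestHadAuthorization = false) {
  // no-store forbids storage in every cache, private or shared.
  if (directives.has('no-store')) return false;

  if (cacheType === 'shared') {
    // An unqualified `private` directive keeps the response out of shared caches.
    if (directives.has('private')) return false;

    // A response to a request carrying Authorization may only be stored by a
    // shared cache when the response explicitly allows it.
    const explicitlyShareable = ['public', 's-maxage', 'must-revalidate']
      .some((name) => directives.has(name));
    if (requestHadAuthorization && !explicitlyShareable) return false;
  }
  return true;
}
```

This is why personalized responses are typically marked `private`: the browser's private cache can keep them, while CDNs and proxies in between must not.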

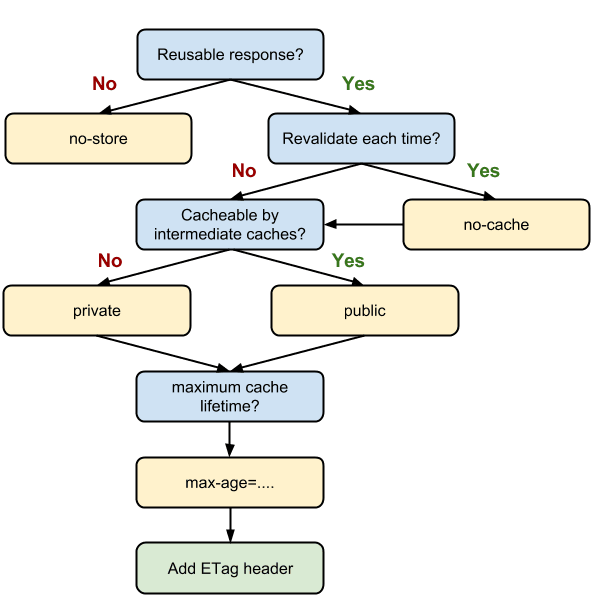

Controlling HTTP caching behavior

To configure HTTP caching properly, the HTTP headers that servers add to responses matter the most. The available headers include Cache-Control, ETag, Last-Modified, Expires, Age, Pragma (deprecated) and Vary, of which the first three are the most commonly used.

Cache-Control

The Cache-Control header can be included in a response to specify how, and for how long, the browser and other intermediate caches may cache that individual response. Multiple directives can be combined using a comma (,) separator. Directives are case-insensitive, but lowercase is recommended, since some implementations do not recognize uppercase directives.
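A minimal parser shows how the comma-separated, case-insensitive format works. It is a sketch, not a complete implementation: quoted arguments that themselves contain commas (such as `no-cache="Set-Cookie, Authorization"`) are not handled, and the function name is hypothetical.

```javascript
// Minimal Cache-Control parser: splits on commas, lowercases directive names
// and keeps any `=value` argument as a string. Quoted arguments containing
// commas are NOT handled by this sketch.
function parseCacheControl(header) {
  const directives = new Map();
  for (const part of header.split(',')) {
    const trimmed = part.trim();
    if (trimmed === '') continue; // tolerate empty entries such as "a,,b"
    const eq = trimmed.indexOf('=');
    // Directive names are case-insensitive, so normalize them to lowercase.
    const name = (eq === -1 ? trimmed : trimmed.slice(0, eq)).trim().toLowerCase();
    if (eq === -1) {
      directives.set(name, true); // directive without an argument, e.g. no-store
      continue;
    }
    let value = trimmed.slice(eq + 1).trim();
    if (value.length >= 2 && value.startsWith('"') && value.endsWith('"')) {
      value = value.slice(1, -1); // strip the optional quotes around the argument
    }
    directives.set(name, value);
  }
  return directives;
}
```

Arguments come back as strings, so a lifetime would be read with something like `Number(parseCacheControl('public, max-age=600').get('max-age'))`.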

ETag

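The ETag response header contains an identifier for a specific version of a resource. Its value is generated by the server, and no particular generation method is mandated. ETags are used to revalidate stale cached responses: the client sends the stored ETag back in an If-None-Match request header, and if the resource has not changed, the server replies with 304 Not Modified so the cached copy can be reused.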
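On the server side, revalidation amounts to comparing the client's `If-None-Match` header with the resource's current ETag. The sketch below assumes `currentEtag` is a hypothetical, already-computed version identifier (for example, a content hash) and that no tag contains a comma. It uses the standard `Headers` class available in browsers and Node 18+. `If-None-Match` uses weak comparison, so a `W/` prefix is ignored.

```javascript
// Minimal sketch of ETag revalidation on the server side.
// `currentEtag` is a hypothetical, already-computed version identifier.
// Weak comparison: a leading W/ is ignored on both sides.
function stripWeak(tag) {
  return tag.trim().replace(/^W\//, '');
}

function respondToConditionalGet(requestHeaders, currentEtag, body) {
  const ifNoneMatch = requestHeaders.get('If-None-Match');
  if (ifNoneMatch !== null) {
    // Assumes no tag contains a comma, so a simple split is enough.
    const matches = ifNoneMatch.trim() === '*' ||
      ifNoneMatch.split(',').some((tag) => stripWeak(tag) === stripWeak(currentEtag));
    if (matches) {
      // The client's cached copy is still current: headers only, no body.
      return { status: 304, headers: { ETag: currentEtag }, body: null };
    }
  }
  // No condition, or the resource changed: send the full response.
  return { status: 200, headers: { ETag: currentEtag }, body };
}
```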

For more on controlling HTTP cache behavior through request headers, see the references below.

Heuristic Caching

Even when a response has no Cache-Control header, it may still be cached if certain conditions are met. In that case, the browser tries to [guess](https://www.mnot.net/blog/2017/03/16/browser-caching#heuristic-freshness) the most effective way to cache that type of response content.
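For a response that has a `Last-Modified` header but no explicit expiration, RFC 9111 (section 4.2.2) suggests using a fraction of the time since the resource was last modified, noting 10% as a typical setting. The sketch below computes that value; the function name is hypothetical, and individual browsers may use different fractions or caps.

```javascript
// Heuristic freshness after RFC 9111 section 4.2.2: a fraction of the interval
// between Date and Last-Modified. 10% is the "typical setting" the RFC mentions;
// real browsers may use other values and caps.
function heuristicFreshnessSeconds(dateHeader, lastModifiedHeader, fraction = 0.1) {
  const date = Date.parse(dateHeader);
  const lastModified = Date.parse(lastModifiedHeader);
  if (Number.isNaN(date) || Number.isNaN(lastModified) || lastModified > date) {
    return 0; // cannot compute a sensible heuristic, so treat as not fresh
  }
  return Math.floor(((date - lastModified) / 1000) * fraction);
}
```

With a `Last-Modified` date ten days before the response's `Date`, the heuristic lifetime comes out to one day (86400 seconds).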

Memory Cache

Memory cache is a browser-dependent caching layer that sits in front of the service worker cache. It typically stores copies of resources (such as images, scripts, and stylesheets) that are currently in use or were recently used. Its primary advantage is access speed. Because it lives in memory, however, it is volatile: entries are kept only while the browser tab is open and are typically cleared when the tab is closed.


References

- Service worker caching and HTTP caching -- web.dev

- HTTP Caching (RFC 9111) -- IETF

- HTTP caching -- MDN Web Docs

- Prevent unnecessary network requests with the HTTP Cache -- web.dev

- 循序漸進理解 HTTP Cache 機制 (Understanding the HTTP Cache Mechanism Step by Step)